Designing AI-assisted pipelines across pre-production, real-time production, and post-production.

I approach AI as a systems problem, not a standalone tool. My focus is on where AI meaningfully accelerates or augments real production workflows: previs and environment generation, iteration speed, consistency, and handoffs between departments. Every experiment I build is evaluated against production constraints: latency, controllability, repeatability, and integration with existing CG and virtual production (VP) pipelines.

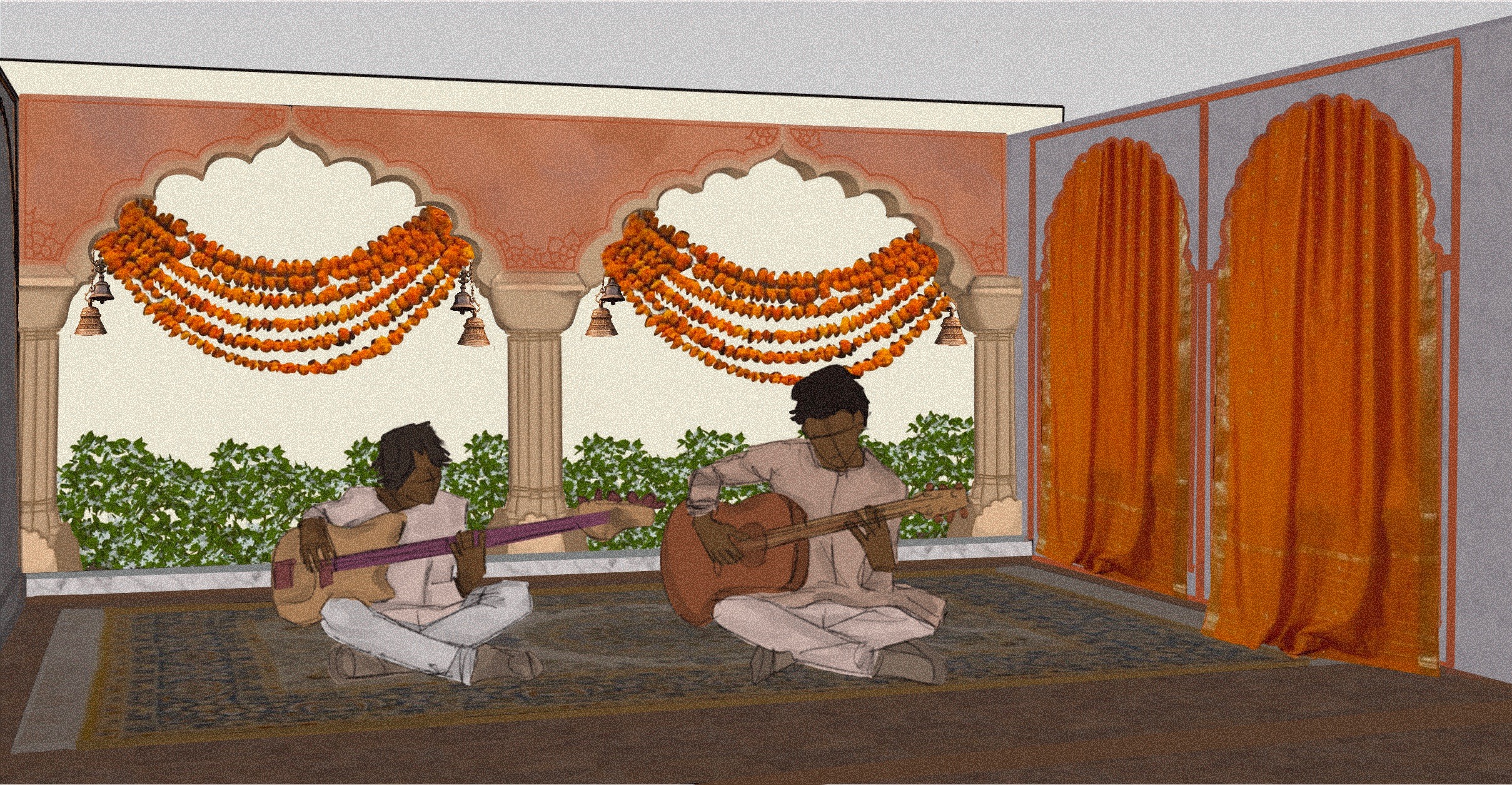

Directing and actively researching an AI-assisted virtual production pipeline aimed at improving previs and shot planning efficiency using limited real-world reference data. The system integrates Unreal Engine, LiDAR/photogrammetry, and AI-assisted Gaussian splat reconstruction (via tools such as World Labs) to convert small sets of stylized location imagery into metrically grounded, navigable previs environments. This workflow supports early-stage camera blocking, environment validation, and creative alignment across departments when traditional scouting or full photogrammetry is impractical.
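
The entry above does not say how the splat reconstruction is brought to real-world scale. One standard way to do it, assumed here purely for illustration, is to match a few features between the reconstruction and the LiDAR scan and apply Horn's symmetric scale estimate: the square root of the ratio of each point set's spread about its centroid. The sketch below is a minimal TypeScript version under that assumption; `Vec3`, `estimateScale`, and `applyScale` are hypothetical names, and rotation/translation alignment is deliberately left out.

```typescript
// Hypothetical sketch: estimate the uniform scale that maps a splat
// reconstruction (arbitrary units) onto LiDAR measurements (metres).
// Assumes source[i] and target[i] are the same physical feature.
type Vec3 = [number, number, number];

function centroid(pts: ReadonlyArray<Vec3>): Vec3 {
  let x = 0, y = 0, z = 0;
  for (const [px, py, pz] of pts) {
    x += px;
    y += py;
    z += pz;
  }
  const n = pts.length;
  return [x / n, y / n, z / n];
}

// Sum of squared distances from the centroid (the point set's "spread").
function spread(pts: ReadonlyArray<Vec3>): number {
  const [cx, cy, cz] = centroid(pts);
  let s = 0;
  for (const [px, py, pz] of pts) {
    s += (px - cx) ** 2 + (py - cy) ** 2 + (pz - cz) ** 2;
  }
  return s;
}

// Horn's symmetric scale estimate. Because it compares spreads about each
// centroid, the result is unaffected by rotation or translation between frames.
function estimateScale(source: ReadonlyArray<Vec3>, target: ReadonlyArray<Vec3>): number {
  if (source.length !== target.length || source.length < 2) {
    throw new Error("need at least two matched point pairs");
  }
  const s = spread(source);
  if (s === 0) throw new Error("source points are coincident");
  return Math.sqrt(spread(target) / s);
}

// Rescale a point set about its centroid by factor k.
function applyScale(pts: ReadonlyArray<Vec3>, k: number): Vec3[] {
  const [cx, cy, cz] = centroid(pts);
  return pts.map(([px, py, pz]): Vec3 => [
    cx + k * (px - cx),
    cy + k * (py - cy),
    cz + k * (pz - cz),
  ]);
}
```

With exactly two matched points (for example, the two ends of a tape-measured doorway), the estimate reduces to the ratio of the measured distance to the reconstructed distance, which is often all early previs needs.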

Independent research into AI style transfer and consistency using custom-trained LoRAs and ComfyUI pipelines to understand the limitations of AI outputs in production contexts.

Designed a scroll-driven image-sequence system for real-time visual storytelling on the web, optimized for runtime performance, frame preloading, and perceptual smoothness.
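
The core of a scroll-driven image sequence can be sketched as three small pure functions: map scroll progress to a frame index, choose an order in which to preload frames, and fall back to the nearest already-loaded frame while loading is still in progress. This is a minimal TypeScript illustration, not the system described above; the function names and the coarse-to-fine preload strategy are assumptions.

```typescript
// Hypothetical helpers for a scroll-driven image sequence. The names and the
// coarse-to-fine preload order are illustrative, not a specific implementation.

// Map a scroll offset to a frame index, clamped to [0, frameCount - 1].
function frameForScroll(scrollTop: number, maxScroll: number, frameCount: number): number {
  if (frameCount <= 0) throw new Error("frameCount must be positive");
  if (maxScroll <= 0) return 0;
  const progress = Math.min(Math.max(scrollTop / maxScroll, 0), 1);
  return Math.round(progress * (frameCount - 1));
}

// Order frames for preloading: both ends first, then halve a power-of-two
// stride each pass so every region of the sequence gets a nearby frame early.
function preloadOrder(frameCount: number): number[] {
  if (frameCount <= 0) return [];
  const seen = new Uint8Array(frameCount);
  const order: number[] = [];
  const take = (i: number): void => {
    if (!seen[i]) {
      seen[i] = 1;
      order.push(i);
    }
  };
  take(0);
  take(frameCount - 1);
  // Largest power-of-two stride below frameCount.
  let stride = 1;
  while (stride * 2 < frameCount) stride *= 2;
  // The final pass (stride 1) fills every remaining gap.
  for (; stride >= 1; stride = Math.floor(stride / 2)) {
    for (let i = 0; i < frameCount; i += stride) take(i);
  }
  return order;
}

// While frames are still loading, show the closest one that is ready
// instead of a blank canvas. Returns -1 if nothing has loaded yet.
function nearestLoaded(target: number, loaded: ReadonlyArray<boolean>): number {
  for (let d = 0; d < loaded.length; d++) {
    if (target - d >= 0 && loaded[target - d]) return target - d;
    if (target + d < loaded.length && loaded[target + d]) return target + d;
  }
  return -1;
}
```

In a browser, a scroll listener would compute `frameForScroll(window.scrollY, document.documentElement.scrollHeight - window.innerHeight, frameCount)`, pass the result through `nearestLoaded`, and draw that image to a `<canvas>` with `drawImage` inside `requestAnimationFrame`, so at most one draw happens per display refresh. Loading coarse-to-fine means a fast scrub early on still lands near a real frame rather than waiting for strictly sequential loading to catch up.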